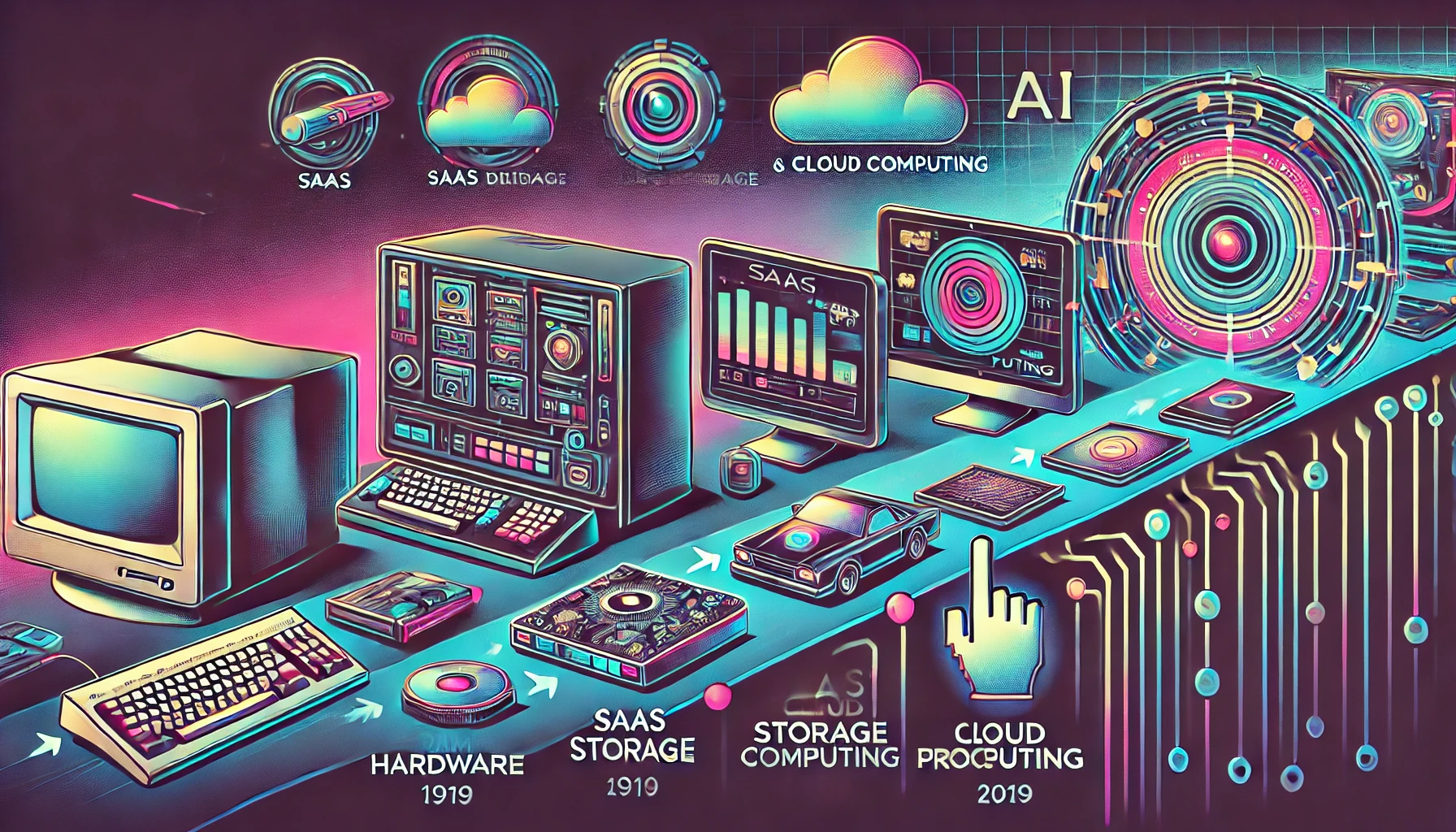

For decades, computing power has been measured in RAM, storage capacity, and processor speed (MHz/GHz). These were the key metrics that determined the performance of personal devices, from desktop computers to smartphones. But the future of computing is shifting away from local hardware limitations toward cloud-first, AI-driven infrastructure.

In the next decade, hardware specs will become irrelevant to the average user as computing moves into distributed cloud networks, AI processing units, and real-time streaming of computation.

1. The Decline of Local Computing

Right now, most devices still rely on local memory, local storage, and local processing power. But these constraints are rapidly disappearing due to:

✅ Cloud computing dominance: More applications are running directly from the cloud, reducing reliance on local resources.

✅ AI-driven processing: Large neural networks increasingly run in data centers rather than on the device, offloading heavy computation from personal hardware.

✅ Edge computing & decentralized AI: Instead of doing everything on the device, smart systems will distribute workloads across nearby edge nodes and multiple cloud nodes for efficiency.

This means the need for powerful local hardware will decrease, and users won’t need to worry about RAM or storage upgrades anymore.

2. Processing Power as a Cloud Service

In the future, users won’t be limited by their device’s MHz/GHz. Instead, they will rent computation on demand from cloud providers, with AI models deciding how much to allocate.

🔹 Streaming computation – Just as gaming is moving to cloud-based services like GeForce Now and Xbox Cloud Gaming, everyday computing will follow.

🔹 AI-driven OS – Instead of running heavy applications on a local device, AI-native operating systems will manage tasks via real-time cloud execution.

🔹 Quantum and AI acceleration – Instead of upgrading processors, users will tap into advanced quantum and AI computing resources on demand.

You will no longer need a faster CPU: your AI assistant will allocate just the right amount of computing power whenever it is needed.
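As a rough illustration of what "allocating just the right amount of computing power" could look like, the sketch below picks the cheapest compute tier that covers a task's estimated demand. The tier names, capacities, prices, and the `pick_tier` helper are all hypothetical and do not reflect any real provider's API or pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str              # hypothetical plan name
    gflops: float          # assumed compute capacity of the tier
    price_per_hour: float  # assumed price, for illustration only


# Hypothetical tiers; a real service would publish its own catalog.
TIERS = [
    Tier("small", 50.0, 0.02),
    Tier("medium", 500.0, 0.15),
    Tier("large", 5000.0, 1.20),
]


def pick_tier(required_gflops: float, tiers=TIERS) -> Tier:
    """Return the cheapest tier whose capacity covers the demand."""
    candidates = [t for t in tiers if t.gflops >= required_gflops]
    if not candidates:
        raise ValueError("no tier is large enough for this task")
    return min(candidates, key=lambda t: t.price_per_hour)


# A light task lands on the smallest tier, a heavier one moves up.
light = pick_tier(10.0)
heavy = pick_tier(800.0)
```

In this toy model the user never picks a processor; the demand estimate drives the choice, which is the idea the section describes.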

3. Storage Becomes Infinite and Invisible

In the future, storage won’t be measured in GBs or TBs. It will be entirely fluid and dynamic.

🔹 Persistent AI Memory – AI models will remember context across sessions, eliminating the need for users to store files manually.

🔹 Decentralized Data Layers – Instead of saving files locally, data will exist across cloud nodes and be retrievable from anywhere.

🔹 Context-Aware Access – Devices will dynamically pull in only the data needed for a specific task, in real-time.

The concept of “storage space running out” will cease to exist.
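One way to picture context-aware access is a small local cache in front of remote storage: the device keeps only what was used recently and fetches everything else on demand. The sketch below is a minimal version of that idea, assuming a hypothetical `OnDemandStore` class and a plain dictionary standing in for the cloud backend.

```python
from collections import OrderedDict
from typing import Callable


class OnDemandStore:
    """Keeps only recently used items locally; fetches the rest on demand."""

    def __init__(self, fetch_remote: Callable[[str], bytes], capacity: int = 2):
        self._fetch_remote = fetch_remote  # hypothetical remote lookup
        self._capacity = capacity          # max items held on the device
        self._cache = OrderedDict()        # key -> bytes, oldest first
        self.remote_fetches = 0            # counts trips to the backend

    def get(self, key: str) -> bytes:
        if key in self._cache:
            self._cache.move_to_end(key)   # mark as most recently used
            return self._cache[key]
        data = self._fetch_remote(key)
        self.remote_fetches += 1
        self._cache[key] = data
        if len(self._cache) > self._capacity:
            self._cache.popitem(last=False)  # evict least recently used
        return data


# Stand-in for a cloud backend (an assumption for this demo).
remote = {
    "report.txt": b"Q3 numbers",
    "photo.jpg": b"\xff\xd8",
    "notes.md": b"# todo",
}
store = OnDemandStore(remote.__getitem__, capacity=2)
store.get("report.txt")
store.get("report.txt")  # served from the local cache, no second fetch
```

The local footprint stays bounded by `capacity` no matter how much data lives remotely, at the cost of a network round trip whenever an evicted item is needed again.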

4. The Death of Device Upgrades

For decades, the tech industry thrived on incremental hardware upgrades—but that model is dying.

✅ Instead of upgrading hardware, users will upgrade their cloud computing plans.

✅ AI models will optimize computing resources dynamically, making personal hardware specs irrelevant.

✅ Even low-end devices will perform like supercomputers because all real processing will happen in the cloud.

The New Computing Paradigm: Intelligent, Cloud-Native, AI-Optimized

In this future, people won’t ask, “How much RAM does my device have?” They’ll simply use AI-powered systems that allocate resources as needed.

🚀 Processing speed won’t be about GHz—it will be about how fast your AI agent retrieves and executes cloud-based computation.

🚀 Storage won’t be about TBs—it will be about context-aware access to infinite cloud memory.

🚀 Upgrades won’t be about new hardware. They will be about connecting to smarter, more efficient AI services.

The Bottom Line?

The era of hardware-defined computing is coming to an end. The future belongs to AI-driven, cloud-powered, dynamically allocated intelligence.